Papers Explained 246: BROS

BROS (BERT Relying On Spatiality) keeps the same main Transformer structure as BERT, but it encodes the relative positions of texts in 2D space and learns from unlabeled documents with an area-masking strategy.

The main structure of BROS follows LayoutLM, but there are two critical advances:

- use of a spatial encoding metric that describes the spatial relations between text blocks

- use of a 2D pre-training objective designed for text blocks in 2D space

How the spatial information of text blocks is encoded determines how aware the text blocks are of their spatial relations. LayoutLM simply encodes the absolute x- and y-axis positions of each text block, but this specific-point encoding is not robust to minor position changes of the text blocks. BROS instead uses relative positions between text blocks to encode spatial relations explicitly.
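To make the robustness argument concrete, here is a minimal sketch (not taken from the paper) that compares absolute and relative positions. The helper names `normalize` and `relative_offsets` and the toy coordinates are illustrative assumptions. Every block is shifted by the same few pixels, as might happen between two scans of one page: all absolute coordinates change, while the pairwise offsets stay the same.

```python
def normalize(points, width, height):
    """Scale pixel coordinates into [0, 1] using the image size."""
    return [(x / width, y / height) for x, y in points]


def relative_offsets(points):
    """Pairwise (dx, dy) for every ordered pair of points (i, j)."""
    return [[(xi - xj, yi - yj) for (xj, yj) in points] for (xi, yi) in points]


# Toy top-left corners of three text blocks on a 1000 x 1000 page (made-up values).
corners = [(100, 200), (400, 200), (100, 260)]
# The same page with a small global shift of (+8, +4) pixels.
shifted = [(x + 8, y + 4) for x, y in corners]

abs_a = normalize(corners, 1000, 1000)
abs_b = normalize(shifted, 1000, 1000)
rel_a = relative_offsets(abs_a)
rel_b = relative_offsets(abs_b)

# Absolute positions differ for every block ...
absolute_changed = all(a != b for a, b in zip(abs_a, abs_b))
# ... while relative offsets agree up to floating-point rounding.
relative_same = all(
    abs(da[0] - db[0]) < 1e-9 and abs(da[1] - db[1]) < 1e-9
    for row_a, row_b in zip(rel_a, rel_b)
    for da, db in zip(row_a, row_b)
)
print(absolute_changed, relative_same)
```

A uniform shift is only the simplest case; it is used here because it isolates the difference between the two encodings.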

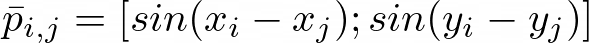

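BROS first normalizes all the 2D points of the text blocks using the size of the image. It then calculates the relative position of each vertex with respect to the same vertex of the other text blocks' bounding boxes and applies a sinusoidal function. Writing a point as $p = (x, y)$, the relative position between points $p_i$ and $p_j$ is:

$$\bar{p}_{i,j} = \left[ f^{\text{sinu}}(x_i - x_j) \,;\, f^{\text{sinu}}(y_i - y_j) \right]$$

where $f^{\text{sinu}}: \mathbb{R} \to \mathbb{R}^{D^s}$ is a sinusoidal function, $D^s$ is the dimension of the sinusoid embedding, and the semicolon denotes concatenation.

Applying this to the top-left (tl), top-right (tr), bottom-right (br), and bottom-left (bl) vertices, the relative position of the j-th bounding box with respect to the i-th bounding box is represented by four vectors:

$$\bar{p}^{\text{tl}}_{i,j},\quad \bar{p}^{\text{tr}}_{i,j},\quad \bar{p}^{\text{br}}_{i,j},\quad \bar{p}^{\text{bl}}_{i,j}$$

Finally, BROS combines the four relative positions by applying a linear transformation:

$$bb_{i,j} = W^{\text{tl}} \bar{p}^{\text{tl}}_{i,j} + W^{\text{tr}} \bar{p}^{\text{tr}}_{i,j} + W^{\text{br}} \bar{p}^{\text{br}}_{i,j} + W^{\text{bl}} \bar{p}^{\text{bl}}_{i,j}$$

where $W^{\text{tl}}, W^{\text{tr}}, W^{\text{br}}, W^{\text{bl}} \in \mathbb{R}^{(H/A) \times 2D^s}$ are linear transition matrices, $H$ is the hidden size of BERT, and $A$ is the number of self-attention heads.

BROS encodes the spatial relations directly into the contextualization of text blocks. In detail, it calculates an attention logit that combines semantic and spatial features:

$$A^{h}_{i,j} = \left(W^{q}_{h} t_i\right)^{\top} \left(W^{k}_{h} t_j\right) + \left(W^{q}_{h} t_i\right)^{\top} bb_{i,j}$$

where $t_i$ and $t_j$ are the context representations of the i-th and j-th tokens, and $W^{q}_{h}$ and $W^{k}_{h}$ are the linear transition matrices of the h-th head. The first term is the usual Transformer attention; the second adds the relative spatial information.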
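The encoding steps above can be sketched in plain Python. This is an illustration under stated assumptions, not the authors' implementation: the Transformer-style frequency schedule inside `f_sinu`, the toy sizes (`HIDDEN`, `HEAD_DIM`, `D_S`), the random weights, and all helper names are hypothetical. It computes the sinusoidal embedding of each vertex difference, sums the four linearly transformed vertex embeddings into `bb`, and adds the spatial term to the usual query-key product.

```python
import math
import random


def f_sinu(value, dim):
    """Sinusoidal embedding of a scalar: dim/2 sines followed by dim/2 cosines.
    The 10000-based frequency schedule is an assumption borrowed from the Transformer."""
    half = dim // 2
    freqs = [1.0 / (10000 ** (k / half)) for k in range(half)]
    return [math.sin(value * f) for f in freqs] + [math.cos(value * f) for f in freqs]


def rel_point(p_i, p_j, dim):
    """Concatenate f_sinu(x_i - x_j) and f_sinu(y_i - y_j) into a 2*dim vector."""
    return f_sinu(p_i[0] - p_j[0], dim) + f_sinu(p_i[1] - p_j[1], dim)


def matvec(W, v):
    """Matrix-vector product for a list-of-rows matrix."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def bb(box_i, box_j, W_corner, dim):
    """Sum over the four vertices (tl, tr, br, bl) of W^v times the relative embedding."""
    out = [0.0] * len(W_corner[0])
    for W, p_i, p_j in zip(W_corner, box_i, box_j):
        out = [o + c for o, c in zip(out, matvec(W, rel_point(p_i, p_j, dim)))]
    return out


def attention_logit(t_i, t_j, box_i, box_j, W_q, W_k, W_corner, dim):
    """Semantic term (q . k) plus spatial term (q . bb) for one attention head."""
    q = matvec(W_q, t_i)
    k = matvec(W_k, t_j)
    return dot(q, k) + dot(q, bb(box_i, box_j, W_corner, dim))


rng = random.Random(0)
HIDDEN, HEAD_DIM, D_S = 8, 4, 4  # toy sizes, far smaller than a real model
W_q = rand_matrix(HEAD_DIM, HIDDEN, rng)
W_k = rand_matrix(HEAD_DIM, HIDDEN, rng)
W_corner = [rand_matrix(HEAD_DIM, 2 * D_S, rng) for _ in range(4)]

# Normalized boxes given as (tl, tr, br, bl) vertices; values are made up.
box_a = [(0.10, 0.20), (0.30, 0.20), (0.30, 0.25), (0.10, 0.25)]
box_b = [(0.40, 0.20), (0.60, 0.20), (0.60, 0.25), (0.40, 0.25)]
t_a = [rng.uniform(-1, 1) for _ in range(HIDDEN)]
t_b = [rng.uniform(-1, 1) for _ in range(HIDDEN)]

logit = attention_logit(t_a, t_b, box_a, box_b, W_q, W_k, W_corner, D_S)
print(round(logit, 4))
```

Because only coordinate differences enter `rel_point`, moving both boxes by the same offset leaves the logit unchanged up to rounding, which is the property that distinguishes this encoding from absolute position embeddings.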

Pretraining

BROS utilizes two pre-training objectives: one is a token-masked LM (TMLM) used in BERT and the other is a novel area-masked LM (AMLM) introduced in this paper.

TMLM randomly masks tokens while keeping their spatial information, and the model then predicts the masked tokens using the spatial information and the remaining unmasked tokens as clues. The process is identical to the MLM of BERT and the Masked Visual-Language Model (MVLM) of LayoutLM.
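A minimal sketch of this token-level masking, assuming tokens and boxes arrive as parallel lists. The function name `token_masked_lm`, the `(x0, y0, x1, y1)` box layout, and the default 15% rate (BERT's usual setting) are assumptions for illustration, and BERT's random-replacement and keep-as-is variants are left out for brevity.

```python
import random

MASK = "[MASK]"


def token_masked_lm(tokens, boxes, mask_prob=0.15, seed=None):
    """Mask tokens at random but leave every bounding box untouched.

    Returns (masked_tokens, boxes, labels). labels[i] holds the original
    token at masked positions and None elsewhere.
    """
    rng = random.Random(seed)
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)   # the text is hidden ...
            labels.append(tok)    # ... and becomes the prediction target
        else:
            masked.append(tok)
            labels.append(None)
    # Boxes are returned unchanged: the model still sees where each token sits.
    return masked, list(boxes), labels


tokens = ["Total", ":", "$", "12", ".", "50"]
boxes = [(0.1, 0.8, 0.2, 0.83)] * 2 + [(0.7, 0.8, 0.8, 0.83)] * 4
masked, kept_boxes, labels = token_masked_lm(tokens, boxes, mask_prob=0.5, seed=0)
print(masked)
```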

AMLM masks all text blocks located in a randomly chosen area. It can be interpreted as span masking for text blocks in 2D space. Specifically, AMLM consists of four steps: (1) randomly select a text block, (2) identify an area by expanding the region of that text block, (3) determine which text blocks are located in the area, and (4) mask all tokens of those text blocks and predict them.
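The four steps can be sketched as follows. This is a simplified illustration, not the paper's code: the expansion rule (rates drawn from an exponential distribution with parameter `lam`), the containment rule in `inside` (a block counts only if it lies fully within the area), and the dictionary layout of a block are all assumptions made for the example.

```python
import random

MASK = "[MASK]"


def expand_area(box, rate_x, rate_y):
    """Pad an (x0, y0, x1, y1) box on every side in proportion to its size, clipped to [0, 1]."""
    x0, y0, x1, y1 = box
    pad_x = (x1 - x0) * rate_x / 2
    pad_y = (y1 - y0) * rate_y / 2
    return (max(0.0, x0 - pad_x), max(0.0, y0 - pad_y),
            min(1.0, x1 + pad_x), min(1.0, y1 + pad_y))


def inside(box, area):
    """True when the whole box lies within the area."""
    return (area[0] <= box[0] and area[1] <= box[1]
            and box[2] <= area[2] and box[3] <= area[3])


def area_masked_lm(blocks, rng, lam=1.0):
    """blocks: list of dicts with 'box' (x0, y0, x1, y1) and 'tokens' (list of str)."""
    seed_block = rng.choice(blocks)                           # step 1: pick a block
    area = expand_area(seed_block["box"],                     # step 2: expand its region
                       rng.expovariate(lam), rng.expovariate(lam))
    masked_blocks, labels = [], []
    for block in blocks:
        if inside(block["box"], area):                        # step 3: blocks in the area
            tokens = [MASK] * len(block["tokens"])            # step 4: mask every token
            labels.append(list(block["tokens"]))
        else:
            tokens = list(block["tokens"])
            labels.append(None)
        masked_blocks.append({"box": block["box"], "tokens": tokens})
    return area, masked_blocks, labels


# Toy receipt with normalized boxes (made-up values).
blocks = [
    {"box": (0.10, 0.10, 0.30, 0.14), "tokens": ["Item"]},
    {"box": (0.10, 0.16, 0.30, 0.20), "tokens": ["Coffee"]},
    {"box": (0.70, 0.16, 0.80, 0.20), "tokens": ["3", ".", "50"]},
    {"box": (0.10, 0.80, 0.25, 0.84), "tokens": ["Total"]},
]
area, masked_blocks, labels = area_masked_lm(blocks, random.Random(0))
print(area, [b["tokens"] for b in masked_blocks])
```

The selected block always falls inside its own expanded area, so at least one block is masked per call; how many neighbours join it depends on the sampled expansion rates.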

For pre-training, the IIT-CDIP Test Collection 1.0 is used. It consists of approximately 11M document images, but following LayoutLM, the 400K images of the RVL-CDIP dataset are excluded.

Fine Tuning

BROS is fine-tuned on the following benchmark datasets as downstream tasks to evaluate its performance:

- FUNSD dataset: for form understanding

- CORD dataset: for receipt understanding

- SROIE dataset: for receipt understanding

- SciTSR: for table structure recognition

Paper

BROS: A Pre-trained Language Model Focusing on Text and Layout for Better Key Information Extraction from Documents (arXiv:2108.04539)

